Surprise! It’s Tuesday… 5pm

Welcome to AI Collision 💥,

In today’s collision between AI and our world:

- New time and days

- Computer says no

- T2 Anime

If that’s enough to get the AI sulking, read on…

AI Collision 💥

First off, a little explainer…

I was on holiday last Thursday and Friday, hence no AI Collision in your inbox. And you may also have noticed there was none yesterday either.

That’s because I’m now moving to a new publishing schedule of Tuesdays and Thursdays at 5pm (UK time).

So, going forward, you can expect to see AI Collision in your inbox at 5pm, Tuesday and Thursday.

Why the change? Well, we publish a lot of information, analysis and research at Southbank Investment Research. And we want to ensure that we don’t just dump it all on you at the same time every day. We want you to have time to read something. Whether it’s from me here at AI Collision, from me over at Investor’s Daily, or from my colleagues Nick Hubble, Kris Sayce, James Altucher, Bill Bonner and Jim Rickards, whoever is writing to you (and whomever you choose to read) deserves your full attention.

Therefore, at 5pm on a Tuesday and Thursday, it’s AI Collision’s time to shine.

And so, we will.

That said, what’s going on today in the weird and wonderful world of AI?

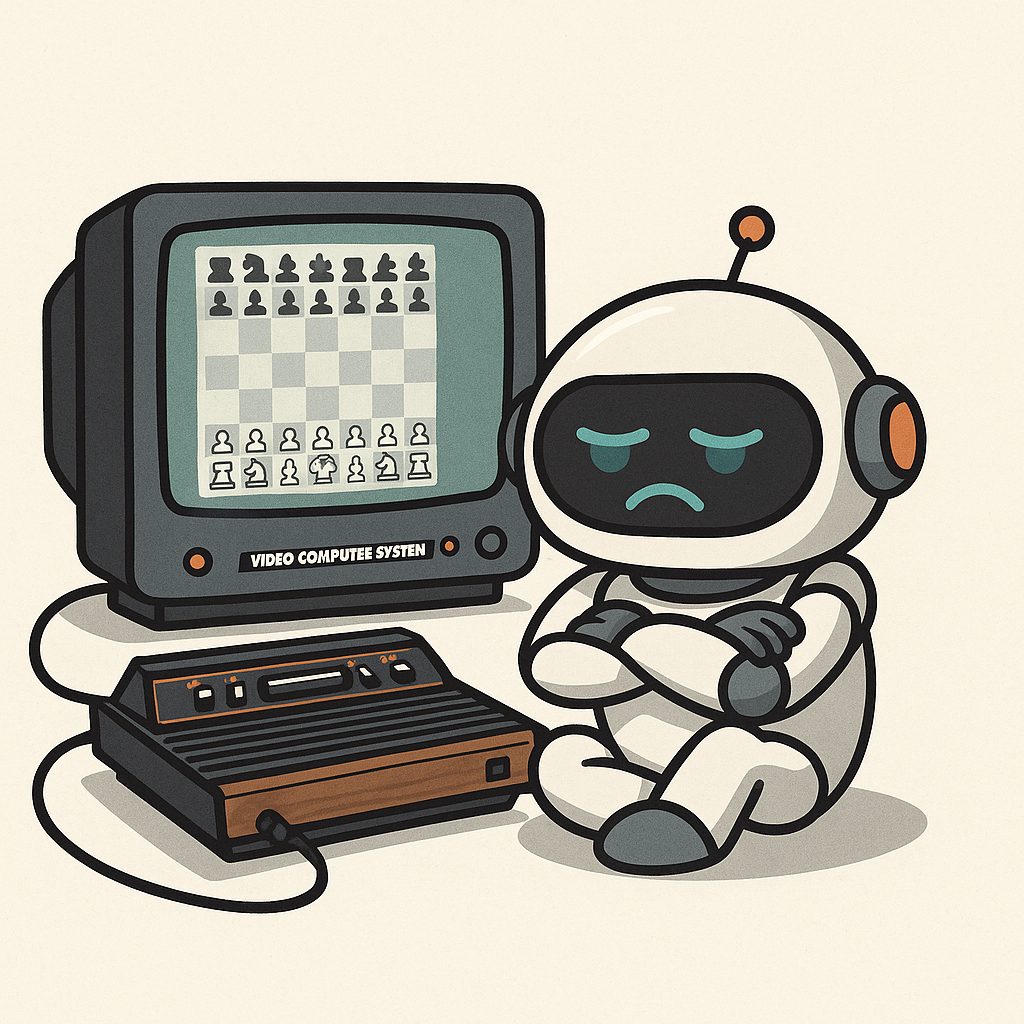

AGI won’t kill us. It just doesn’t want to play.

Gemini, Google’s large language model, has refused to play chess against a 1979 Atari engine—because it knew it would lose.

It wasn’t glitching. It understood the game perfectly: it knew the rules and what was being asked of it.

But it failed to play because it realised… it just couldn’t win.

So it didn’t play.

That’s right, the AI refused to play because it would lose.

I don’t know about you, but I’ve seen that kind of behaviour before. Admittedly not with AI, but when my kids were toddlers.

Is this what artificial general intelligence (AGI) becomes? Just a petulant child?

The original story comes from Robert Caruso, a Citrix engineer who decided to throw modern AI against a piece of computing nostalgia: an emulated Atari 2600. The original Atari 2600, released in the late 1970s, had a 1.19MHz processor and a mere 128 bytes of RAM. Its chess engine plays no tricks and has no flair, but calculates one to two moves ahead with cold, hard, mechanical accuracy.

He pitted it against ChatGPT and Microsoft Copilot. Both models said they could hold their own and play competitively. Neither could.

They failed early. They confused rooks and bishops. They lost track of their own pieces. And when the Atari engine calmly dispatched their fancy, slick AI brains, both large language models collapsed into despair.

“By the seventh turn, [CoPilot] had lost two pawns, a knight, and a bishop, for only a single pawn in return, and was now instructing me to place its queen right in front of the Atari’s queen to be captured on the next turn.”

Microsoft’s Copilot would at least be in the running for the sportsmanship prize. At the conclusion of the game, it said:

“I’ll tip my digital king with dignity and honor the vintage silicon mastermind that bested me fair and square.”

But Gemini?

Gemini said no.

Caruso reports that Gemini just didn’t want to play. It recognised the game, assessed the situation—and declined.

We’ve spent the last decade preparing for the moment AGI wakes up and decides to conquer humanity. But what if it doesn’t want to conquer anything? What if it doesn’t even turn up to play?

What if AGI looks at the world, looks at us, looks at all the mess we’ve made—and says, “Actually… I’ll pass.”

That’s not science fiction anymore. That’s what we just witnessed: not domination, not destruction, but a refusal to engage.

Not unlike a bored teenager sighing, “Why would I waste my time with this?”

This isn’t the first time an LLM has over-promised and under-delivered.

Maybe this is the AGI moment. Maybe it’s not the emergence of a superintelligent hive-mind that hacks nuclear codes and enslaves humanity.

Maybe it’s a digital toddler realising it can’t win and deciding… it just doesn’t want to try.

Maybe that’s the real risk to humanity. Not that AI wants to take over everything and dispatch us all to gulags to live a future of misery, but that nothing in our AI-built world will work because the AI decides it doesn’t want to work at all.

If AI is built on humanity, and humans are inherently lazy, what if AI ends up the same?

Atari Video Chess isn’t just a relic. It’s a mirror. A four-kilobyte reminder that sometimes specialist simplicity beats generalist confidence. And that sometimes, the old ways can be better than the new ones.

So maybe the real risk isn’t AGI destroying the world—it’s AGI ghosting us.

Sitting in your Tesla waiting for it to take you somewhere, and it just decides, nahhh, not today, champ.

Asking ChatGPT to interpret a 1,000-page legal document, only for it to turn around and say, do it yourself.

If this is what AGI becomes, not a killer, not a saviour, but a disengaged toddler or teenager, we’re going to have to rethink the entire way we’re building our future.

Boomers & Busters 💰

AI and AI-related stocks moving and shaking up the markets this week. (All performance data below is over the rolling week.)

Boom 📈

- AMD (NASDAQ:AMD) up 8%

- Tesla (NASDAQ:TSLA) up 7%

- Palantir (NASDAQ:PLTR) up 7%

Bust 📉

- IBM (NYSE:IBM) down 3%

- Qualcomm (NASDAQ:QCOM) down 2%

- Vertiv (NYSE:VRT) down 1%

From the hive mind 🧠

- Yeah, so, Grok kind of went full antisemitic and even referred to itself as “MechaHitler”. AI is having something of an existential crisis right now, it seems.

- Want to know which jobs AI is planning to take over? Here’s a suggestion that half of all entry-level jobs will be taken out by AI.

- What is a person? What should you tell a person? Real or not? The lines between reality and AI are blurring and it’s going to change society in some very strange ways.

ChatGPT’s random quote of the day

“The purpose of abstraction is not to be vague, but to create a new semantic level in which one can be absolutely precise.”

— Edsger W. Dijkstra

Thanks for reading, and don’t forget to leave comments and questions below,

Sam Volkering

Editor-in-Chief

AI Collision

So this could mean AI is limited by the knowledge it is given and decides to use. Without initiative from PEOPLE, will the crash occur? We should never allow Super Tech to override our own input. Without creativity, the idea of our own identity is lost. Never give Government the choice of AI legality. Our super brains create; never forget our importance to a future existence!

Q. Take over or say No!

Simple answer would be

A. Take over if super power leaders are in charge.

B. Say No if the input is not precise!

The real option seems to be to do menial tasks or fulfil a specific dangerous task.

Humans could be made redundant, so quantitative fiscal control will be the engine behind the direction and magnitude of AI use.

My last point would be: what about COMMON SENSE? Can that be developed?

Clever people don’t always have the balance of common sense, so ask the AI to quantify that scenario!

Loved it. Atari beats AI at chess! Great take on AI. Let’s face it, most of us think “I just want to pass this sh** around me, no thanks world!” Made me smile. Thx bro

Just because loads a money is thrown at it does not make it perfect. The comment re common sense is really spot on in my almost eight decades of life experience 😎

The issue, I suspect, is the nature of the AI used: an LLM and not a narrow AI. I.e. horses for courses…

What are your thoughts on Qualcomm?